
Using smartctl and fio to analyze disk health and performance

In this article, we will review a few Linux CLI commands and tools that you can use to work with the system's disks. We will go through rescanning the SCSI bus for a newly added disk, testing its performance, and monitoring its health.

SCSI bus rescan

Let's start with a small trick that lets you rescan the SCSI bus after you add a disk to the system or remove one from it.

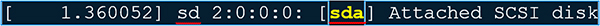

Sometimes, when you physically add or remove a disk, the Linux kernel takes a noticeable amount of time to detect the change on the SCSI bus. When the bus is refreshed automatically, the event usually shows up in the system logs, looking something like the image above.
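To check from the command line whether the kernel has already registered a new disk, you can filter its log. A minimal sketch, assuming the sd driver's usual `sd H:C:T:L: [sdX] Attached SCSI disk` message; the function name `scsi_attached_disks` is hypothetical:

```shell
# Print the device names (sdb, sdc, ...) of disks the kernel reports as attached.
# Reads kernel log lines from stdin, e.g. from `dmesg` or `journalctl -k`.
# Assumption: the sd driver logs lines like "sd 6:0:1:0: [sdb] Attached SCSI disk".
scsi_attached_disks() {
    sed -n 's/.*sd [0-9:]*: \[\(sd[a-z]*\)] Attached SCSI disk.*/\1/p'
}

# Usage (reading the kernel ring buffer may require root):
#   dmesg | scsi_attached_disks
```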

If no change shows up on the SCSI bus, or it takes too long, you can trigger a rescan manually as follows.

First, find the host bus number of your controller:

```
grep mpt /sys/class/scsi_host/host*/proc_name
```

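This should return something like:

```
/sys/class/scsi_host/host6/proc_name:mpt2sas
```

In this case, host6 is the bus number. Here, `mpt` matches the driver name of LSI/Broadcom SAS controllers (`mpt2sas`, `mpt3sas`); use the driver name of your own controller instead.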
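The lookup above can be wrapped in a small function that prints just the host names, which is handy on servers with several controllers. A sketch under the assumption that each host exposes its driver name in `proc_name`, as in the output above; the function name and the optional base-directory argument (useful for testing) are hypothetical:

```shell
# List SCSI hosts (e.g. "host6") whose driver name matches a pattern.
# $1: pattern to look for, e.g. "mpt" for the mpt2sas/mpt3sas drivers
# $2: sysfs directory to search (default: /sys/class/scsi_host)
scsi_hosts_by_driver() {
    hb_pattern=$1
    hb_base=${2:-/sys/class/scsi_host}
    for hb_file in "$hb_base"/host*/proc_name; do
        [ -r "$hb_file" ] || continue      # the glob matched nothing
        if grep -q -- "$hb_pattern" "$hb_file"; then
            # .../host6/proc_name -> host6
            basename "$(dirname "$hb_file")"
        fi
    done
}

# Usage:
#   scsi_hosts_by_driver mpt
```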

The following command (run as root) rescans the bus:

```
echo "- - -" > /sys/class/scsi_host/host6/scan
```

The three hyphens stand for channel, target ID and LUN. Each hyphen is a wildcard, so "- - -" means that all channels, targets and LUNs are scanned.
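When you are not sure which host the new disk hangs off, you can simply rescan all of them. A minimal sketch, assuming the sysfs layout shown above; `rescan_scsi_hosts` and its optional base-directory argument (there only so the function can be tried on a scratch directory) are hypothetical:

```shell
# Ask the kernel to rescan one SCSI host, or every host when none is given.
# "- - -" is a wildcard for channel, target ID and LUN.
# $1: host name such as "host6" (optional; empty means all hosts)
# $2: sysfs directory (default: /sys/class/scsi_host); needs root on a real system
rescan_scsi_hosts() {
    rs_host=${1:-}
    rs_base=${2:-/sys/class/scsi_host}
    if [ -n "$rs_host" ]; then
        set -- "$rs_base/$rs_host/scan"
    else
        set -- "$rs_base"/host*/scan
    fi
    for rs_scan in "$@"; do
        [ -e "$rs_scan" ] || continue      # unknown host or no hosts at all
        echo "- - -" > "$rs_scan" && echo "rescanned $(basename "$(dirname "$rs_scan")")"
    done
}

# Usage:
#   rescan_scsi_hosts host6    # a single host
#   rescan_scsi_hosts          # all hosts
```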

Testing disk performance

Let's say the disk is now visible: you partition it, add it to a RAID group (and you'd better do that), schedule a backup policy for the new filesystem (you'd better do that too) and start using it. Soon you'll want to know how well the disk performs, and the best tool for that is fio.

Fio is a very complete tool for disk performance testing. It simulates a given IO workload through a job, which runs on one or more threads or processes and can be configured with a large number of parameters.

A few of the most important parameters (with their fio option names) are:

- IO type (`rw`): sequential read or write, random read or write, mixed sequential read and write, or mixed random read and write;

- Block size (`bs`): the size of each IO issued. To get close to the real application scenario, use the block size your application uses, or a range of block sizes.

- IO size (`size`): how much data will be written or read.

- IO engine (`ioengine`): how the IOs are issued. This can be regular read/write system calls, memory-mapping the file, splice, asynchronous IO (for example `libaio`), or SCSI generic operations through the SG driver in the Linux kernel.

- IO depth (`iodepth`): for an asynchronous IO engine, the queue depth, that is, how many IO operations are kept in flight against the target.

- Threads or processes (`numjobs`): the number of copies of the job that run in parallel. Fio uses processes by default; the `thread` option makes it use threads instead.

- Direct IO (`direct`): 0 sends the IOs through the operating system's buffers (page cache), while 1 uses non-buffered IO (`O_DIRECT`), bypassing them.
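These parameters map directly onto options in a fio job file. A minimal sketch that writes such a file from the shell; the file name `perftest.fio`, the target file `/tmp/fio-testfile` and the values chosen are illustrative assumptions, not recommendations, and the function name is hypothetical:

```shell
# Write a fio job file that sets each parameter discussed above.
# $1: path of the job file to create (default: perftest.fio)
write_fio_job() {
    cat > "${1:-perftest.fio}" <<'EOF'
[perftest]
; IO type: randrw = mixed random reads and writes
rw=randrw
; size of each IO (bsrange=4k-64k would use a range instead)
bs=4k
; total amount of data to transfer per job
size=1g
; asynchronous IO through Linux native AIO
ioengine=libaio
; IOs kept in flight; only meaningful for asynchronous engines
iodepth=32
; parallel copies of the job; add a "thread" line to use threads instead of processes
numjobs=4
; 1 = non-buffered IO (O_DIRECT), 0 = through the page cache
direct=1
; hypothetical target file; a raw device here would have its data overwritten
filename=/tmp/fio-testfile
EOF
}

# Usage:
#   write_fio_job perftest.fio && fio perftest.fio
```

Lines starting with `;` are comments in fio job files, so the file documents itself.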

Fio also has a few reserved keywords that it replaces with values from the running system. For example:

- $pagesize: the architecture page size of the running system.

- $mb_memory: the total memory of the system, in MB.

- $ncpus: the number of available CPUs.

These can be used on the command line or in a job file, for example:

```
size=30*$mb_memory
```

Sizing the test relative to memory is also good practice when testing the performance of the environment: when the workload goes through the system buffers (`direct=0`), make sure the total IO size is several times larger than the total available memory, so that the page cache cannot absorb most of the IO.
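To see roughly what these keywords resolve to, and how large a buffered test has to be, you can compute similar values yourself. A sketch, assuming a Linux system with `getconf`, `nproc` and `/proc/meminfo`; fio computes its values internally, so treat these as approximations. The function names are hypothetical:

```shell
# Approximate fio's $mb_memory: MemTotal from /proc/meminfo, converted from kB to MB.
# $1: meminfo file (default: /proc/meminfo)
mb_memory() {
    awk '/^MemTotal:/ { printf "%d\n", $2 / 1024 }' "${1:-/proc/meminfo}"
}

# Size in MB of a buffered test N times larger than total memory,
# the shell-side equivalent of a job option such as size=N*$mb_memory.
# $1: multiplier N   $2: meminfo file (optional)
buffered_test_size_mb() {
    echo $(( $1 * $(mb_memory "${2:-/proc/meminfo}") ))
}

# Usage:
#   echo "pagesize=$(getconf PAGESIZE) ncpus=$(nproc) mb_memory=$(mb_memory)"
#   buffered_test_size_mb 30
```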

Another helpful option is `ramp_time`: fio runs the workload for this amount of time before it starts logging performance results. Because the underlying system usually needs a few seconds at the start of a test to reach its maximum, this helps stabilize the results.

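Command example (it writes to the raw device `/dev/sda`, which overwrites the data on that disk):

```
fio --filename=/dev/sda --name=perftest --ioengine=libaio --iodepth=128 --rw=randrw --bs=4k --direct=1 --size='10*$mb_memory' --numjobs=10 --runtime=120 --ramp_time=10
```

The `--size` value is single-quoted so that the shell passes `$mb_memory` to fio unexpanded.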
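When you script such runs, the keywords must reach fio unexpanded. A minimal sketch of a wrapper around the command example; the function name and the `FIO` override (for instance `FIO=echo` for a dry run that only prints the arguments) are hypothetical:

```shell
# Run the random read/write test from the example against a target.
# With a raw device as the target, the write part overwrites its data.
# $1: target file or device
# FIO: command to run (default: fio); FIO=echo gives a dry run
run_randrw_test() {
    # Single quotes keep $mb_memory literal, so fio, not the shell, expands it.
    ${FIO:-fio} --filename="$1" --name=perftest --ioengine=libaio \
        --iodepth=128 --rw=randrw --bs=4k --direct=1 \
        --size='10*$mb_memory' --numjobs=10 --runtime=120 --ramp_time=10
}

# Usage:
#   FIO=echo run_randrw_test /dev/sdb    # print the command line only
#   run_randrw_test /path/to/testfile
```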

Now let's discuss the results. Fio reports results for each thread of the job, including IOPS, bandwidth, latency and resource usage, followed by a summary for the entire job.

Here is the result of one of the 85 threads of a write test with a 4k block size, an IO queue depth of 128 and a 10G file size. In it, `slat` is the submission latency, `clat` the completion latency and `lat` the total latency of the IOs:

```
write: (groupid=0, jobs=1): err= 0: pid=16022: Fri Feb 1 13:59:39 2019
  write: IOPS=48.7k, BW=190MiB/s (200MB/s)(10.0GiB/53788msec)
    slat (nsec): min=1054, max=93015k, avg=15478.85, stdev=477763.37
    clat (usec): min=3, max=94803, avg=2607.73, stdev=5999.84
     lat (usec): min=7, max=94805, avg=2623.60, stdev=6016.23
    clat percentiles (usec):
     | 1.00th=[ 553], 5.00th=[ 619], 10.00th=[ 644], 20.00th=[ 668],
     | 30.00th=[ 693], 40.00th=[ 717], 50.00th=[ 750], 60.00th=[ 775],
     | 70.00th=[ 791], 80.00th=[ 816], 90.00th=[ 4293], 95.00th=[20841],
     | 99.00th=[24773], 99.50th=[28705], 99.90th=[39584], 99.95th=[51119],
     | 99.99th=[82314]
   bw ( KiB/s): min=84659, max=685496, per=1.23%, avg=193958.93, stdev=81400.59, samples=107
   iops       : min=21164, max=171374, avg=48489.33, stdev=20350.19, samples=107
  lat (usec)  : 4=0.01%, 10=0.01%, 20=0.01%, 50=0.01%, 100=0.01%
  lat (usec)  : 250=0.01%, 500=0.39%, 750=49.14%, 1000=37.64%
  lat (msec)  : 2=1.84%, 4=0.89%, 10=1.31%, 20=3.13%, 50=5.60%
  lat (msec)  : 100=0.06%
  cpu         : usr=7.06%, sys=20.96%, ctx=5251, majf=0, minf=893
  IO depths   : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%
     submit   : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1%
     issued rwt: total=0,2621440,0, short=0,0,0, dropped=0,0,0
     latency  : target=0, window=0, percentile=100.00%, depth=128
```
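If you save this output to a file, the headline numbers are easy to pull out for comparisons between runs. A sketch, assuming the human-readable format shown above (fio's `--output-format=json` is the sturdier choice for heavier scripting); the function name is hypothetical:

```shell
# Print "<direction> <IOPS> <bandwidth>" for every per-job result line such as
#   write: IOPS=48.7k, BW=190MiB/s (200MB/s)(10.0GiB/53788msec)
# Reads fio's normal (human-readable) output from stdin.
fio_iops_bw() {
    sed -n 's/^[[:space:]]*\([a-z]*\): IOPS=\([^,]*\), BW=\([^ ]*\).*/\1 \2 \3/p'
}

# Usage:
#   fio perftest.fio > result.txt
#   fio_iops_bw < result.txt
```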

And the job summary:

```
Run status group 0 (all jobs):
  WRITE: bw=15.1GiB/s (16.2GB/s), 181MiB/s-202MiB/s (190MB/s-212MB/s), io=850GiB (913GB), run=50728-56455msec

Disk stats (read/write):
  zd192: ios=43/621049, merge=0/0, ticks=0/40450, in_queue=40479, util=68.01%
```
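The numbers in this output are consistent with one another, and checking that on your own results is a quick sanity test. In the per-thread result, 10 GiB written in 4 KiB blocks is 10 × 262,144 = 2,621,440 IOs (the `issued rwt` total), and 2,621,440 IOs in 53.788 s is about 48.7k IOPS, or about 190 MiB/s. In the summary, 85 jobs × 10 GiB gives io=850GiB, and 850 GiB over the longest run time of 56.455 s is about 15.1 GiB/s. A small awk-based helper for the per-job arithmetic, with a hypothetical name:

```shell
# Derive the IO count, IOPS and bandwidth from the data moved, block size and run time.
# $1: data written or read, in MiB   $2: block size, in KiB   $3: run time, in ms
# Prints: "<IOs> <IOPS> <MiB/s>"
io_stats() {
    awk -v mib="$1" -v bs_kib="$2" -v ms="$3" 'BEGIN {
        ios = mib * 1024 / bs_kib          # number of IOs issued
        secs = ms / 1000
        printf "%d %.0f %.1f\n", ios, ios / secs, mib / secs
    }'
}

# Usage, with the per-job numbers above (10 GiB = 10240 MiB, 4 KiB blocks, 53788 ms):
#   io_stats 10240 4 53788    # prints: 2621440 48737 190.4
```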

We could go on like this for quite a while, but these are the most important parameters to keep in mind when testing disk performance. Adapt the numbers to your own case to simulate a workload close to that of your real application.

Disk Health Monitoring and Stats

Once you have added the disk to your server and tested its performance, you can start worrying about its health.

For disks whose firmware supports SMART, a useful tool is smartctl, from the smartmontools package.

You can use it to display general information about the disk and extract some valuable details from it, for example with `smartctl -a /dev/sda`.

On SCSI/SAS disks, the output includes an error counter log. From it, you can see the "age" of the drive in terms of gigabytes processed. "Accumulated power on time" and "Accumulated start-stop cycles" are other important age indicators, but they are not always the best way to judge how much a drive has been used over time. A heavily stressed disk has many gigabytes processed and a large number of ECC errors, corrected or not. A non-zero value in the "Total uncorrected errors" column that keeps increasing over time is a good indicator that the disk is going to fail.

```
Error counter log:
           Errors Corrected by           Total   Correction     Gigabytes    Total
               ECC          rereads/    errors   algorithm      processed    uncorrected
           fast | delayed   rewrites  corrected  invocations   [10^9 bytes]  errors
read:          0        0         0         0          0          1.067           0
write:         0        0         0         0          0        213.089           0
```
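To track the "Total uncorrected errors" column over time, for example from a cron job, you can pick it out of the error counter log. A sketch, assuming the SCSI layout shown above, where that count is the last field of the `read:`, `write:` and (on some drives) `verify:` rows; the function name is hypothetical:

```shell
# Report rows of smartctl's SCSI error counter log whose
# "Total uncorrected errors" value (the last field) is non-zero.
# Reads `smartctl -l error /dev/sdX` (or `smartctl -a`) output from stdin.
# Exit status: 0 if all counters are zero, 1 otherwise.
check_uncorrected_errors() {
    awk '
        /^(read|write|verify):/ {
            if ($NF + 0 != 0) { print $1, "uncorrected errors:", $NF; bad = 1 }
        }
        END { exit (bad ? 1 : 0) }
    '
}

# Usage:
#   smartctl -l error /dev/sda | check_uncorrected_errors || echo "check /dev/sda"
```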

Many SMART attributes are visible, but the following are critical to monitor over time:

- Read Error Rate - the rate of hardware read errors. A non-zero value can indicate a problem with either the disk surface or the read head, although on some drives (notably Seagate models) the raw value is vendor-encoded and non-zero even on a healthy disk.

- Reallocated Sectors Count - when the drive detects a read/write verification error, it marks the sector as reallocated and moves its data to a spare area. Reallocated sectors are the equivalent of bad sectors; the process is also known as remapping.

- Reallocation Event Count - the raw value is the total number of attempts to transfer data from reallocated sectors to spare areas.

- Current Pending Sector Count - the number of unstable sectors waiting to be remapped. If a later read or write of such a sector succeeds, the counter is decremented.

- Uncorrectable Sector Count - the total number of uncorrectable errors when reading or writing a sector.

- Soft Read Error Rate - the number of off-track errors. Degradation of this parameter may indicate imminent drive failure; if it is different from 0, the best thing to do is make a backup.

- Disk Shift - the distance the disk has shifted relative to the spindle, usually due to shock.
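On ATA disks these attributes are listed by `smartctl -A`. A sketch that prints the raw value of each watched attribute that is not zero, assuming the default attribute names smartmontools uses (`Raw_Read_Error_Rate`, `Reallocated_Sector_Ct`, `Reallocated_Event_Count`, `Current_Pending_Sector`, `Offline_Uncorrectable`, `Soft_Read_Error_Rate`, `Disk_Shift`); names can differ per drive model, and the function name is hypothetical:

```shell
# Print "<attribute> <raw value>" for each watched SMART attribute whose raw
# value is not zero. Reads `smartctl -A /dev/sdX` output from stdin, where
# RAW_VALUE is the 10th column of each attribute row.
# Exit status: 0 if every watched attribute is zero, 1 otherwise.
watch_smart_attributes() {
    awk '
        BEGIN {
            watched["Raw_Read_Error_Rate"] = 1
            watched["Reallocated_Sector_Ct"] = 1
            watched["Reallocated_Event_Count"] = 1
            watched["Current_Pending_Sector"] = 1
            watched["Offline_Uncorrectable"] = 1
            watched["Soft_Read_Error_Rate"] = 1
            watched["Disk_Shift"] = 1
        }
        # Attribute rows start with a numeric ID.
        $1 ~ /^[0-9]+$/ && ($2 in watched) && $10 + 0 != 0 {
            print $2, $10
            bad = 1
        }
        END { exit (bad ? 1 : 0) }
    '
}

# Usage:
#   smartctl -A /dev/sda | watch_smart_attributes
```

Given the vendor-specific encoding mentioned above, a non-zero `Raw_Read_Error_Rate` is worth comparing against earlier readings before acting on it.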

If you are not interested in all the indicators, you can check the disk for errors only. The command below produces output only if the device reports a failing SMART health status, or if some of the logged self-tests ended with errors:

```
smartctl -q errorsonly -H -l selftest /dev/sda
```
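In scripts, smartctl's exit status is often more convenient than its output: according to the EXIT STATUS section of the smartctl manual page, it is a bit mask in which each bit flags a different condition. A sketch that decodes it; the messages paraphrase the manual page and the function name is hypothetical:

```shell
# Decode the bit mask returned by smartctl (see "EXIT STATUS" in `man smartctl`).
# $1: exit status of a previous smartctl run
decode_smartctl_status() {
    st=$1
    [ "$st" -eq 0 ] && { echo "no problems reported"; return 0; }
    bit=0
    for msg in \
        "command line did not parse" \
        "device open failed" \
        "a SMART or other command failed, or a SMART data checksum error" \
        "SMART status check returned DISK FAILING" \
        "prefail attributes at or below threshold" \
        "some attributes were at or below threshold in the past" \
        "device error log contains errors" \
        "self-test log contains errors"
    do
        if [ $(( (st >> bit) & 1 )) -eq 1 ]; then
            echo "bit $bit: $msg"
        fi
        bit=$((bit + 1))
    done
}

# Usage:
#   smartctl -q errorsonly -H -l selftest /dev/sda
#   decode_smartctl_status $?
```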

Keep a close eye on the indicators above so you can act before a disk is lost. Although SMART regularly performs background scans, you can always run a short or long self-test (`smartctl -t short /dev/sda` or `smartctl -t long /dev/sda`) to actively check for errors; the results are recorded in the self-test log.
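To keep that eye on every disk without doing it by hand, the quiet error check can be run from a cron job. A sketch, assuming the disks appear as `/dev/sd?`; `check_all_disks` and the `SMARTCTL` override (useful for trying the function without real disks) are hypothetical names. It prints a report only for devices where smartctl prints something or exits with a non-zero status:

```shell
# Run the quiet SMART error check on each device given (default: /dev/sd?)
# and print a report only for devices with something to report.
# SMARTCTL: command to run (default: smartctl)
# Exit status: 0 if no device reported a problem, 1 otherwise.
check_all_disks() {
    [ "$#" -gt 0 ] || set -- /dev/sd?
    cad_rc=0
    for cad_dev in "$@"; do
        [ -e "$cad_dev" ] || continue
        cad_status=0
        cad_report=$(${SMARTCTL:-smartctl} -q errorsonly -H -l selftest "$cad_dev") || cad_status=$?
        if [ "$cad_status" -ne 0 ] || [ -n "$cad_report" ]; then
            echo "== $cad_dev (smartctl exit status $cad_status)"
            if [ -n "$cad_report" ]; then echo "$cad_report"; fi
            cad_rc=1
        fi
    done
    return "$cad_rc"
}

# Usage, e.g. from a daily cron job:
#   check_all_disks || logger -t smart "SMART problems detected"
```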

About the author

Catalin Maita is a Storage Engineer at Bigstep. He is a tech enthusiast with a focus on open-source storage technologies.
