The Case for Big Data Deontology

The recent Cambridge Analytica scandal shows how critical access to data has become. By all indications, voters’ own answers to a quiz, together with access to their social networks, enabled political campaigners to “gently nudge” whole swing states towards Trump through targeted messaging. It’s a case of your data being used against you. This was a failure of procedure rather than a security breach or a technical malfunction, so there is no technical solution that protects against this kind of “attack”.

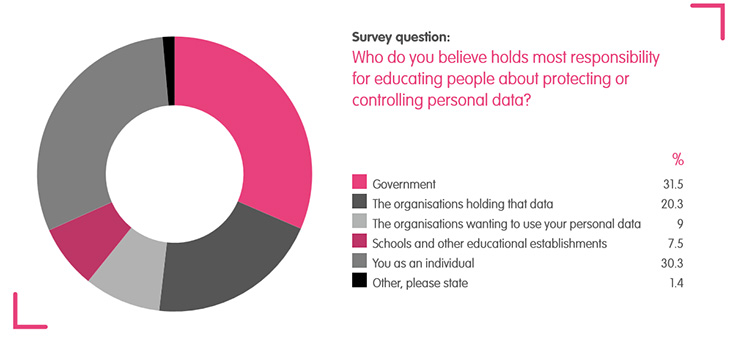

The full backlash will not be clear immediately, but it will surely persist for a long time as mistrust in online services. Taken to the extreme, that distrust could eventually lead people to evict technology from their lives altogether. Levels of trust are already dropping:

Source: Digital Catapult (2015) - TRUST IN PERSONAL DATA: A UK REVIEW http://www.digitalcatapultcentre.org.uk/wp-content/uploads/2015/07/Trust-in-Personal-Data-AUK-Review.pdf

The above study also shows that people seem to trust financial services with their data 12 times more than media or retail services. I suspect the reason is the heavily regulated nature of the financial industry. If that’s the case, one way to increase trust is regulation across the board.

The EU has the GDPR, which will come into effect later this year, but the U.S. still relies on a sectoral approach to regulation: health information is regulated by HIPAA, financial information by GLBA and FCRA, and marketing by TCPA, TSR and CAN-SPAM. This lack of unity blurs the picture for most ‘data subjects’ in the U.S. They have no idea what their rights are, so they assume they have none and that corporations and political institutions operate with impunity.

People who don’t understand technology tend to overreact and can become victims of conspiracy theories or scams. There are already alternatives to Facebook, like Vero, promising “no algorithms and no data mining. Ever.” Such promises further demonize big data use and exacerbate mistrust in these technologies.

I worry that this technological hypochondria, which by all indications will continue to spread, driven in part by the U.S. public, will further divide our already segregated society. We’ll have ‘connected’ and ‘unconnected’ people, drifting further apart in their views and perception of reality. It is paradoxical that in a world that keeps getting smaller, the rifts between us grow larger.

Unfortunately, regulation is not enough. People jump from one extreme to the other all the time, and that is very dangerous. Take vaccines, for example: a degradation of trust in corporations leads parents to reject a scientifically proven way of saving their kids’ lives.

This trust crisis reminds me of Frank Herbert’s Dune, where a devastating war with intelligent robots led to a total ban on “thinking machines”, which included computers of all kinds. People still needed to analyze political situations, so they had to evolve humans who could handle computationally intensive tasks with the aid of drugs. Interestingly, these mentats had to learn a discipline called “the naïve mind”, which is a mind without prejudice and preconception.

Fortunately, the people who use these tools still have some level of scientific background, and I put my trust in them to influence, if not necessarily control, the way data is used. We really can - at least to some degree - protect the general public from abuse. In the case of Cambridge Analytica, the whistleblower Christopher Wylie did exactly this, and without him the practices would have continued.

My point is that Big Data practitioners must develop and adhere to a kind of professional deontology, as in journalism or the medical field. I guess the first such attempt could be considered Google’s “Don’t be evil” principle, which is visionary if you think about it. Who knows, maybe the world will never know how it helped avoid a data-driven apocalypse. For a while, that is.
